A digital learning environment for educating engineers

Background and justification of the project

Providing high-quality education to an increasing number of engineering students with fewer staff is becoming more and more challenging. A low staff/student ratio requires more efficient interaction between staff and students in their learning environment. Additionally, student groups are increasingly heterogeneous, which requires a versatile student support system that can be tailored to the students’ needs, preferably by the students themselves. Finally, the demand for educational programs with a high level of choice of content is increasing. Students want to select courses that enable them to contribute to solving major societal problems and courses that are interesting to them. In the current rigidly planned educational program this freedom is limited, with good reason: running courses and taking exams multiple times a year simply requires too much staff. Creating a digitized blended learning environment can change this.

Long term vision

Why is it that children and a large adult population effortlessly spend over 40 hours a week on playing games and find it much harder to concentrate on learning skills and acquiring knowledge that are much more useful for society and for their own development? This question was raised by Jane McGonigal[1]. Her research showed that the game environment provides key elements that are not always present in our educational environments. These elements are:

- Community: peers/seniors that help you out and group quests with peers/seniors. This corresponds partially to study groups and group assignments, although these are done with peers in the same year (horizontal) rather than with senior students (vertical).

- Angels: mentors that give you hints, tips, and tricks and coach you through a level. This is often the teacher/assistant helping out in guided self-education.

- Challenges: after some exercises that train your proficiency, an ‘end-boss’ needs to be defeated. An important aspect is that the exercises are at the skill level of the player, while the end-boss is a bigger challenge at a slightly higher level that gives a high emotional reward when overcome. The exercises prepare for this. Typically, we see this in education too, although the bigger challenge is often to overcome the stress caused by the time limit imposed in exams and the enormous impact that failing a test has on the student’s progress in the course program.

- Appraisal: when a level is completed, points/stars are awarded, peers and angels give kudos, and competition for stars leads to champions. In education this is usually present in the form of intermediate tests and self/peer-appreciation for solving exercises and helping peers get to a solution. In general, however, this is not publicly recognized, formalized, or automated.

- Unlimited repetition: though not identified as a key element by Prof. McGonigal, levels in game environments can typically be retaken at will; even when they have been successfully completed, one can retake them and improve. In the end, all levels need to be completed. In the educational system, the number of tests that can be taken is classically very limited. This leads to high stress among students. The size of the test is usually limited as well, whereas more extensive testing on all topics would reflect a student’s proficiency more accurately. More extensive testing also prevents students from economizing time-on-task by concentrating on popular exam topics.

The game environment automatically creates the elements above without much need for personal attention from the teacher. Automating these processes would reduce the time the teacher spends on them and create more time for individual or small-group guidance and support.

[1] www.ted.com/talks/jane_mcgonigal_gaming_can_make_a_better_world/up-next.

Objectives and expected outcomes

Now, how can we incorporate these elements into the educational system and improve them? Significant developments have been made in facilitating the digital classroom. For the course Basic Chemical Reactor Engineering at TU/e, the CANVAS environment is used, which provides digitally accessible course material on a webpage. The course is subdivided into modules. Each module contains:

- References to book chapters (or webpages/wikis in the future),

- Weblectures that explain/summarize the theory in 5-7 minutes,

- Pencasts of exercises with applications of the theory,

- Digital exercises with digital checking and feedback,

- A digital forum on which Q&As are posted, and

- A Test (the ‘end-boss’).

If a module requires prerequisite skills or knowledge, it should refer to supporting modules in which these can be refreshed or learned. It is now easy to set a required skill level before a student can enroll in a module, and to make this requirement content-specific rather than course-specific.
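The content-specific skill-level requirement described here can be illustrated with a minimal sketch. The module names, the grade thresholds, and the helper names `missing_prerequisites` and `can_enroll` are hypothetical and chosen only for illustration; they do not describe how CANVAS implements prerequisites.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Module:
    """One course module; names and thresholds below are illustrative only."""
    name: str
    # Maps a prerequisite module name to the minimum grade (0-100) required.
    prerequisites: dict[str, int] = field(default_factory=dict)


def missing_prerequisites(module: Module, best_grades: dict[str, int]) -> list[str]:
    """Return the supporting modules the student still has to refresh or learn."""
    return [
        name
        for name, required in module.prerequisites.items()
        if best_grades.get(name, 0) < required
    ]


def can_enroll(module: Module, best_grades: dict[str, int]) -> bool:
    """A student may enroll once every prerequisite skill level is reached."""
    return not missing_prerequisites(module, best_grades)


# Hypothetical module and student record.
ideal_reactors = Module("Ideal reactors", {"Mass balances": 50, "Reaction kinetics": 50})
student = {"Mass balances": 72, "Reaction kinetics": 41}

print(missing_prerequisites(ideal_reactors, student))  # ['Reaction kinetics']
print(can_enroll(ideal_reactors, student))             # False
```

Because the requirement is attached to a module rather than to a course, the same check would work for a student arriving from a different program, as long as a grade for the supporting module is on record.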

If all courses are constructed this way, then any course can be followed at any time, at any speed, at any place, by anyone. This strongly empowers students to assemble their own personal track and set their own pace.

The application goes even further: education does not end after graduation, and life-long learning is a natural continuation. Continuous learning becomes easier to access for employed professionals. Also, skills need to be maintained and preferably continuously certified, which is now easy to achieve. Compare the IChemE accreditation system for chemical engineers, where skills and knowledge for chartered engineers are tested every two years.

With the availability of more and more digital content in courses, we can reorganize the way in which this content is learned. Rather than thinking in terms of courses that usually bundle a wide range of topics and intended learning objectives, we can organize the content around problems. Through solving problems at increasingly higher levels, students nurture their skill tree and make it grow.

As an example, if we want to design an electrolysis process in which water is split into hydrogen and oxygen, and the hydrogen is dried, compressed to 700 bar and liquefied, we can do this at different skill-levels (Student Readiness Levels, SRL).

SRL 1: Unit-based operation: details of unit design are not required; only elementary mass and heat balances of the units in the process need to be evaluated and auxiliary equipment needs to be chosen. Requires e.g. basic thermodynamics and the Bernoulli equation for flow.

SRL 2: SRL 1 + basic design of specific units; requires knowledge of mass transfer, heat transfer, and reaction kinetics (for electrolysis)

SRL 3: SRL 2 + advanced design of specific units; requires advanced thermodynamics, use of detailed engineering correlations, and numerical techniques

SRL 4: SRL 3 + process control, process dynamics, start-up/shut-down, safety

SRL 5: SRL 4 + costing, economic optimization, invention of alternative (sub)processes and comparison with an existing process

The topic can easily change from water electrolysis to e.g. methylamine production from methanol and ammonia. The steps and SRLs are essentially the same.

In conclusion, in the long term (2030), I would like to see a structured blended learning environment for engineering courses, based on a skill tree. (Inter)national notifying bodies should accredit certain skill trees, so that students who complete them can exercise a certain profession adequately and are internationally employable. The basic and advanced engineering courses should be completely automated.

The focus of my project for the next year will be on the efficiency of formative and summative digital assessment.

Project design and management

The project will roughly follow this timeline:

- A showcase for a blended learning environment with digital testing (August 2019)

- A protocol/best-practice for digital testing (March 2019)

- A first sketch of the skill tree set-up (January 2020)

- A broader implementation of this environment to obtain ‘critical mass’ (at least 5 courses, August 2020)

- Proper statistics on the efficiency of the new learning environment. It would be desirable to have additional courses piloting the environment in parallel, in the chemical engineering & chemistry faculty but also in physics, mechanical engineering, and mathematics (August 2020)

Results and learnings

- The course has a clearly structured webpage in which the structure of the course is well-explained. This new approach to studying takes some time to get used to, but is generally appreciated by students (ref. student survey).

- The STEP environment in combination with the USB-stick is very functional as it is and can be used efficiently. Some general remarks and recommendations:

  - Allow students to practice with the STEP environment and with answering and checking questions.

  - Distribute some STEP USB sticks to teachers well in advance of the start of the course (e.g. 1 month) to verify that they are functional.

  - Ask open questions (numerical answers, formulae) rather than multiple choice.

  - Do not penalize incorrect answers; the extra time needed to find the error is already a penalty in itself.

  - Have a sufficiently large database of questions (300+) spread over all topics.

  - Show leniency towards students who missed an opportunity because of software/hardware problems. Give the teacher a mandate to allow these extra attempts without approval of the Exam Committee; the student can still turn to the Exam Committee if required.

  - Avoid any copying of exam questions.

  - Extend the database of questions every year, and constantly evaluate questions for ambiguity, clarity, and difficulty.

- The skill tree: a first draft for the chemical reactor engineering course was made; it still needs more detailing.

- Broader implementation: ongoing; possible candidates are Transport Phenomena (in cooperation with Mechanical Engineering), Thermodynamics, and Calculus.

- Statistics:

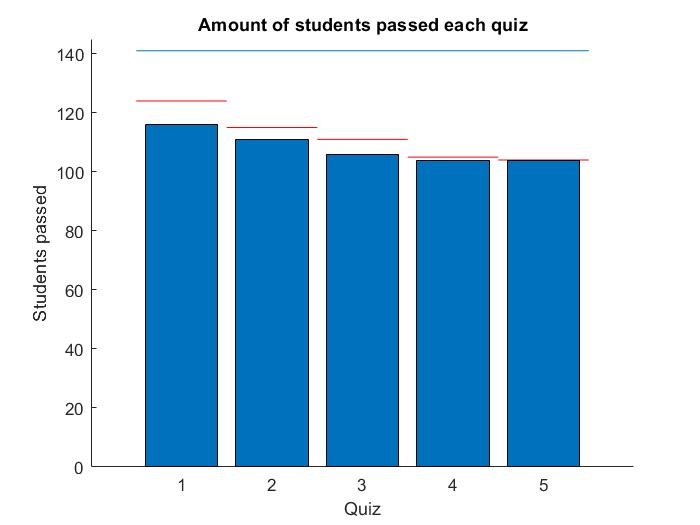

Students could pass the course by passing five quizzes, each linked to a specific module. Each quiz had to be passed with a grade of 50/100 or higher, and the total average grade, with each module weighted equally, had to be at least 55/100. Students only gained access to a quiz after passing its predecessor.
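As a minimal sketch, the pass rule can be written out as follows. It assumes that the best grade per quiz is the one that counts and that the 55/100 average is a lower bound; the function names are hypothetical and do not refer to the actual testing software.

```python
QUIZ_PASS_GRADE = 50      # minimum grade per quiz (out of 100)
COURSE_PASS_AVERAGE = 55  # minimum equally weighted average (assumed lower bound)
NUM_QUIZZES = 5


def next_accessible_quiz(best_grades):
    """Return the 1-based number of the first quiz not yet passed, or None.

    best_grades lists the student's best grade per quiz in module order
    (assumption: the best attempt counts). A quiz only opens after its
    predecessor has been passed, so the first unpassed quiz is the only
    one the student can currently attempt.
    """
    for index in range(NUM_QUIZZES):
        if index >= len(best_grades) or best_grades[index] < QUIZ_PASS_GRADE:
            return index + 1
    return None


def passes_course(best_grades):
    """All five quizzes passed and the equally weighted average is high enough."""
    if next_accessible_quiz(best_grades) is not None:
        return False
    average = sum(best_grades[:NUM_QUIZZES]) / NUM_QUIZZES
    return average >= COURSE_PASS_AVERAGE


print(passes_course([60, 55, 50, 70, 65]))  # True: average is 60
print(passes_course([50, 50, 50, 50, 50]))  # False: average is 50
print(next_accessible_quiz([80, 45]))       # 2: quiz 2 has not been passed yet
```

The second case shows why the two thresholds differ: a student who only just passes every quiz still has to retake at least one of them to lift the average to 55.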

Overall -- This year the success rate for the digital testing environment was very high. Traditional passing rates were 30-40% (ref. student admin needed). This year, out of the 141 subscribers to the course, 104 students (73.8%) passed the course. Most of the 36 failing students never attempted the first quiz (17) or attempted it only once (18).

Overall statistics after the exam (June 27th): 141 participants

Passed quiz 1: 116/141 = 82.3%

Passed quiz 2: 111/141 = 78.7%

Passed quiz 3: 106/141 = 75.2%

Passed quiz 4: 104/141 = 73.8%

Passed quiz 5: 104/141 = 73.8%

In the re-sit in August, one additional student passed quizzes 4 and 5, giving a passing rate of 105/141 (74.5%).

Of the students that passed QUIZ 1, 90% passed the course. One student passed QUIZ 1 but did not continue.
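The percentages above follow directly from the reported counts. The short check below only reproduces that arithmetic; the variable names are illustrative.

```python
participants = 141
# Number of students that had passed each quiz after the exam of June 27th.
passed_by_quiz = {1: 116, 2: 111, 3: 106, 4: 104, 5: 104}

for quiz, passed in passed_by_quiz.items():
    print(f"Passed quiz {quiz}: {passed}/{participants} = {passed / participants:.1%}")

# Share of the students who passed quiz 1 that went on to pass the course.
course_passers = passed_by_quiz[5]
conditional = course_passers / passed_by_quiz[1]
print(f"Passed the course, given quiz 1 passed: {conditional:.1%}")  # 89.7%

# Passing rate after the August re-sit (one additional student).
after_resit = (course_passers + 1) / participants
print(f"Passing rate after re-sit: {after_resit:.1%}")  # 74.5%
```

The conditional rate of 104/116 = 89.7% is the figure rounded to 90% in the text.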

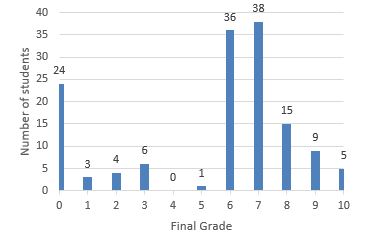

The average grade for the course, for students that obtained a final grade, is 7.1. The distribution of grades is shown in the accompanying figure.

Retakes -- Students can retake any quiz at will. Here, a retake means that a student redid a quiz after having already passed it. It is interesting to see how often this occurs and how much the grade improves:

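| Quiz | Re-takes: 1x | 2x | 3x | >4x | Average grade improvement |
|------|--------------|----|----|-----|---------------------------|
| 1    | 18           | 4  | -  | -   | +2.2                      |
| 2    | 21           | 5  | 2  | 1   | +1.7                      |
| 3    | 14           | 2  | -  | -   | +2.6                      |
| 4    | 12           | 1  | -  | -   | +2.0                      |
| 5    | 24           | 3  | 1  | -   | +1.4                      |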

The number of retakes is actually lower than expected. The grade improvement is significant, indicating that the students indeed had a better understanding of the topic than their previous grade indicated. On the other hand, a comment in the student survey mentions the luck factor of getting a difficult or really difficult question on a topic.

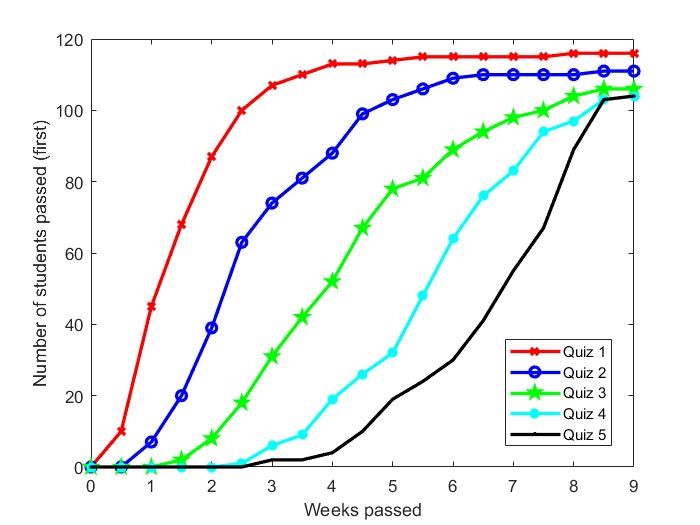

Time – Students could attempt a quiz twice per week, giving a total of 18 attempts. The accompanying graph shows, for each quiz, the number of students that had passed it as a function of time.

The shape of the curves is typically sigmoidal. The fastest students start passing the course in weeks 3-4. About 50% of the students that passed Quiz 1 pass the course in week 7.

Concluding remarks

Success? It may be too soon to claim success for this method. If these statistics repeat themselves in the coming years, it would be safe to conclude that the time-on-task that students experience in this system of very regular testing is the main reason for its success. Students receive regular feedback on their level of understanding, and the frequent summative testing becomes formative when necessary: students obtain a better understanding of what they need to know and do to pass a specific topic. The testing of knowledge and skill level is far more extensive than with a single exam at the end of the course.

Student-Teacher Interaction – During the course students were able to reserve time with the teacher. Only a handful of students chose to do this. Most students chose to get help from peers using WhatsApp and other media. The second most used channel was the Q&A threads on the forum. Two PhD students regularly checked the forum for questions and usually provided feedback within one day. This stimulated both the student who asked and other students to ask more questions.

Memorizing answers to questions – Some students observed that a number of fellow students had memorized the solutions to certain standard questions without really understanding them. Even though the exact problem text is not available to the students, some memory of the question remains. When students discussed these questions amongst each other, it became apparent to them that the knowledge level of these fellow students was below par. Although memorizing answer formulae is already impressive given the large number of questions, one can easily mitigate this phenomenon by increasing the number of questions and by adding more questions with similar wording but subtly different boundaries. The correct answer can then only be obtained by following the systematic problem approach.
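One way to build questions with similar wording but subtly different boundaries is to generate them from a template. The sketch below is a hypothetical illustration and not taken from the actual question database: it assumes a first-order, constant-density reaction in an isothermal ideal reactor, for which the conversion is kτ/(1 + kτ) in a CSTR and 1 − exp(−kτ) in a PFR. The parameter values and the 1% answer tolerance are likewise assumptions.

```python
import math
import random


def reference_conversion(reactor, k, tau):
    """Conversion of a first-order, constant-density reaction in an ideal reactor."""
    if reactor == "CSTR":
        return k * tau / (1 + k * tau)
    if reactor == "PFR":
        return 1 - math.exp(-k * tau)
    raise ValueError(f"unknown reactor type: {reactor}")


def make_question(rng):
    """Build one question variant and its reference answer (illustrative values)."""
    reactor = rng.choice(["CSTR", "PFR"])
    k = rng.choice([0.1, 0.2, 0.5])     # rate constant in 1/min
    tau = rng.choice([2.0, 5.0, 10.0])  # residence time in min
    text = (
        f"A first-order reaction (k = {k} 1/min) is carried out in an "
        f"isothermal {reactor} with a residence time of {tau} min. "
        "Calculate the conversion."
    )
    return text, reference_conversion(reactor, k, tau)


def is_correct(answer, reference, rel_tol=0.01):
    """Accept numerical answers within an assumed 1% relative tolerance."""
    return math.isclose(answer, reference, rel_tol=rel_tol)


rng = random.Random(2019)  # fixed seed so the variants are reproducible
for _ in range(3):
    text, reference = make_question(rng)
    print(text, f"-> {reference:.3f}")
```

For k = 0.2 1/min and τ = 5 min the two variants read almost identically, yet the CSTR answer is 0.500 and the PFR answer is about 0.632, so a memorized formula for one reactor type fails on the other.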

Digital vs. conventional – I would also like to add that in recent years of ‘conventional examination’, with written exams at the end of the course, students had taken to memorizing a standard solution model for a problem taken from an exercise and copying that onto their exam paper. Where the exam question differed from the exercise, convenient writing mistakes were made and a solution was obtained. Normally, points would be given for the first general parts, and this way students would scrape together enough points to pass the course, or they could get ‘lucky’ if the exam question was very similar to the exercise. This was a first trigger for my wish to give feedback on the answer in an exam, thus eliminating these close-enough answers.

Finally… Did it save time? – After the initial hiccups of the testing environment protocol and the (considerable) time investment in constructing an unambiguous question database, the digital testing now runs very smoothly and almost effortlessly. The method is very scalable and generally applicable. It saves me a tremendous amount of time, even compared to the single conventional exam. Students immediately know their result, and information on student progress and other statistics on specific questions and topics are readily obtained, allowing for advanced fine-tuning of the learning process. I can now focus on giving feedback on specific questions from students; however, this appeared not to be in high demand, which is fine. Students generally managed to get the required support through peers and the discussion forum. There is a risk, though, of losing contact with the student population. I am not sure whether this is a problem, or how to change it if it is. Over the years, different guided self-study group formats have been tried in this course, always with the same low student attendance. I hear this from colleagues elsewhere in the university too. Maybe we overrate the necessity and efficiency of face-to-face contact with students with regard to knowledge transfer, and it is merely for our, and sometimes the students’, entertainment…